Why you need a data platform

You need data to do your work. Without data, you’re guessing at what is going on, and your decisions will be based on these guesses. Adding reliable data to the mix will help you make better decisions. This applies to everyone in your company: not just the senior leadership, but everybody from the C-level through to customer success. Figuring out where to put all of this data, and how to manage and use it, turns out to be quite challenging. A modern data platform addresses these exact issues.

The vision: an informed team

How much do you rely on gut instincts? Your gut can get you pretty far, but wouldn’t it be nice to have a realistic view on that new feature you’re planning to roll out? There are many ways to get more information, and understanding your users by speaking with them is at the top of that list. Once you have some users, you’ll want to understand how they are actually using your product. This is when you begin to add some instrumentation to your app or website. Now you have data! We’re going to want to ask some questions now. How many users are making it through your signup funnel? How effective is your search system? How well is your recommender engine working?

Unsurprisingly, product is not the only driver throwing up questions about what is actually happening. Let’s take a closer look at who needs to use data at your company.

Other roles in your organisation also make many decisions every day. Marketing needs to analyse the performance of campaigns. Product managers need to understand user behavior and test their changes.

The reality: a barely informed team

A lot of companies find themselves falling quite short of this vision. Many teams produce data in inconsistent ways. Anybody wishing to use somebody else’s data faces many difficulties: how to access the data, how to interpret it, where the fields come from, how they are generated, and what level of quality to expect.

As it turns out, data can be a liability. It can lead to more misunderstandings and less insight. I recently read this New Yorker piece about sophisticated but dysfunctional medical software and found it very insightful. On the data collected in the Epic medical information system, the author writes:

… doctors’ handwritten notes were brief and to the point. With computers, however, the shortcut is to paste in whole blocks of information — an entire two-page imaging report, say — rather than selecting the relevant details. The next doctor must hunt through several pages to find what really matters. … The software “has created this massive monster of incomprehensibility,” she said, her voice rising.

These problems apply equally to more familiar domains. I am reminded of various kinds of collective data messes that I have seen over the years.

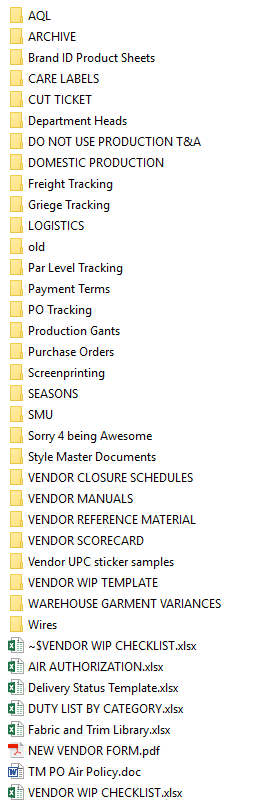

- Maybe you have some shared folders that everybody puts files into, and that is your company’s way of sharing information – an NTFS share, or even something more modern like Dropbox.

- Maybe you have the same thing, but on HDFS. Folders that have not been seen by human eyes until you started to poke around in there.

- Maybe you have a nice data warehouse in the cloud, and teams doing data engineering and BI. But mysteriously, you have landed in a data tar pit where numbers are wrong, and making any changes or introducing new features becomes slow or impossible.

You might not feel like any of this applies to you, because you’re using modern tools to manage your data stack. But even companies that have invested a lot of money into their data stack can wind up here:

… over time the rate of new questions increases rapidly and data teams spend more time updating models and debugging mismatched numbers than answering data questions.

The seeds of chaos

Why might a company with a team full of highly competent people wind up with a data mess? Let’s take a moment for a story. This electric skateboard operator is at the beginning of their incredible journey. Let’s have a look at how they do.

😔 We noticed the report failed for the last 6 weeks. Looks like prod eng made changes to their database, and they didn’t even know that the reporting team exists. Lots of coordination from both teams follows to understand who did what and how to fix it.

Well, this clearly isn’t going to work. More generally, we have a group of stakeholders that all produce data, and also want to use data from other stakeholders. Nobody has stepped back to think about this, so we wind up with this sort of situation:

What’s going to happen in this picture? Well, it depends on your organisation. Here are a few effects that I have observed at different companies:

- Teams are unwilling to take responsibility for data, or to schedule data work for other teams; they have their own backlogs to worry about

- Nobody knows who to ask about changing or interpreting data. A data consumer might get sent backwards through this graph in order to understand the meaning of a single field. That is, consumers might have to talk to an increasingly long chain of data providers, none of whom can say what the field means.

- Many teams are duplicating efforts to clean data, but in incorrect and incompatible ways

- Data is spread between organisational silos that cannot be consistently accessed

Some of these problems are engineering artefacts:

- Nothing is tested in data land, so any changes to existing artefacts are risky

- There’s a mess of legacy spaghetti ETLs, and there are errors or data is missing

- It is unclear where data comes from, or how to introduce or change attributes

- It is unclear what privacy or compliance attributes the data has

Over time, changes crawl to a halt and it’s increasingly difficult to move quickly. Everything is unstable, and the insights generated from data are highly unreliable.
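To make the “nothing is tested” point concrete, here is a minimal sketch in Python of the kind of check that could have flagged the broken report from the story on the first day, rather than after six weeks. The field names (`ride_id`, `user_id`, `distance_km`) and the `check_schema` helper are made up for illustration; they do not come from any particular tool.

```python
# Hypothetical contract: the fields (and types) the reporting team relies on.
EXPECTED_SCHEMA = {"ride_id": int, "user_id": int, "distance_km": float}


def check_schema(rows, expected=EXPECTED_SCHEMA):
    """Return a list of readable problems; an empty list means the rows match."""
    problems = []
    for i, row in enumerate(rows):
        # Fields the consumer expects but the producer no longer sends.
        for name in sorted(expected.keys() - row.keys()):
            problems.append(f"row {i}: missing field '{name}'")
        # Fields that are present but changed type.
        for name, expected_type in expected.items():
            if name in row and not isinstance(row[name], expected_type):
                actual = type(row[name]).__name__
                problems.append(
                    f"row {i}: field '{name}' is {actual}, "
                    f"expected {expected_type.__name__}"
                )
    return problems


# Before the upstream change: everything matches.
rows_ok = [{"ride_id": 1, "user_id": 7, "distance_km": 2.5}]
print(check_schema(rows_ok))  # []

# After the upstream change: a field was renamed and another became a string.
rows_bad = [{"ride_id": 1, "user": 7, "distance_km": "2.5"}]
for problem in check_schema(rows_bad):
    print(problem)
```

A check like this only helps if it runs wherever the data changes hands, which is exactly the kind of convention nobody owns in the situation described above.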

Creating your own data platform 🌤

To address these problems, we are going to need to understand them a bit better. Let’s simplify things by zooming out and having a look at how consumers and producers of data might be organised.

What you see in this picture is a decoupling of producers and consumers. In Fig. 2, data mess, the product team of our skateboard company needs to negotiate how to exchange data with the data science team in one way, the BI team in another, the marketing and product intelligence unit in a third, and the reports and compliance teams in yet another. To do this, the product team has to understand the language, domain and problems of each data client in great detail, and has to develop and operate a specific solution for each client.

In Fig. 3, data platform, the product team works on publishing a dataset into the data platform once. Developing the dataset will still require some understanding of the downstream consumers, but there is now a single solution for every consumer.
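A bit of back-of-the-envelope arithmetic shows why this decoupling matters as a company grows. The sketch below assumes a worst case that is not spelled out above: every team both produces data and consumes data from every other team. The function names are hypothetical.

```python
def point_to_point_exchanges(n_teams: int) -> int:
    """Data mess: each team negotiates a separate exchange with every other team."""
    return n_teams * (n_teams - 1)


def platform_connections(n_teams: int) -> int:
    """Data platform: each team publishes once and reads through one interface."""
    return 2 * n_teams


for n in (3, 6, 12):
    print(n, point_to_point_exchanges(n), platform_connections(n))
# 3 6 6
# 6 30 12
# 12 132 24
```

For a handful of teams the difference is small. The point-to-point count grows with the square of the number of teams, while the platform count grows linearly.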

The data platform is a set of conventions, an agreement to work on things a certain way, and some tech components. People, process and technology are combined to provide a basis for teams to build on: a platform.

But how exactly should this be built? And what are the tradeoffs we’ll have to make? How much effort is it to build this thing, and is it even worth it? Are there off-the-shelf solutions instead?
