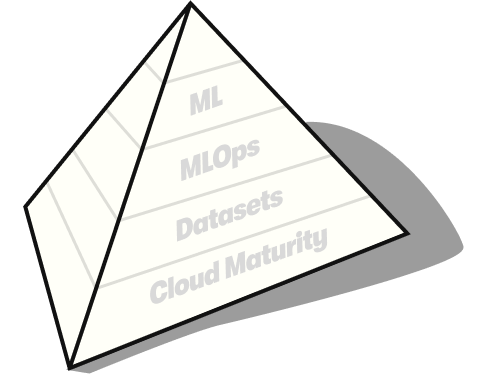

Data Foundations

Data scientists, BI analysts and all your other teams use data. And yes, all of these teams also produce data. So systems get built, data is produced – and before you know it, you’ve landed in a bit of a mess: you have silos that are hard to access, spaghetti ETLs that shovel data from one place to the next, and nobody can say where a piece of data came from, what guarantees are attached to it, or whether it’s even correct. As the system grows, things grind to a halt. What now? We can work on your data foundations.

Cloud Maturity

Whether you’re on AWS, GCP or Azure, you’ll need a solutions architect to design and build the right cloud infrastructure to support your data work. You’ll need identity and access management for data, and components to handle data submission, ingest processing, dataset management and data transformation. This is your engineering foundation.

Datasets

Your data should be organised. Datasets should be owned and published with a schema and data dictionary, and the owning team should ensure their correctness. Privacy properties and lineage should be easy to discover. Software engineering principles – testing, code review and continuous delivery – should be applied to your data and ETLs.
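As a rough illustration of what a published schema with a data dictionary might look like, here is a minimal Python sketch. The `Column` class, the `ORDERS_SCHEMA` dataset and the `validate` helper are all hypothetical names invented for this example; a real setup would more likely use a dedicated schema or data-quality tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Column:
    """One column of a published dataset (hypothetical structure)."""
    name: str
    dtype: type
    description: str  # the data dictionary entry for this column
    nullable: bool = False


# Hypothetical schema for an illustrative "orders" dataset.
ORDERS_SCHEMA = (
    Column("order_id", int, "Unique identifier of the order"),
    Column("customer_email", str, "Contact email; personal data", nullable=True),
    Column("amount", float, "Order total in EUR"),
)


def validate(rows, schema):
    """Return a list of violations; an empty list means the rows conform."""
    errors = []
    expected = {col.name for col in schema}
    for i, row in enumerate(rows):
        extra = set(row) - expected
        if extra:
            errors.append(f"row {i}: unexpected columns {sorted(extra)}")
        for col in schema:
            if col.name not in row:
                errors.append(f"row {i}: missing column {col.name!r}")
                continue
            value = row[col.name]
            if value is None:
                if not col.nullable:
                    errors.append(f"row {i}: {col.name!r} must not be null")
            elif not isinstance(value, col.dtype):
                errors.append(
                    f"row {i}: {col.name!r} should be {col.dtype.__name__}, "
                    f"got {type(value).__name__}"
                )
    return errors
```

A check like this could run as an ordinary unit test in the owning team's delivery pipeline, so that a dataset which no longer matches its published schema fails the build before anyone downstream consumes it.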

MLOps and Testing

Organisations that use machine learning in their products need to train and test rapidly. Offline and online tests need to be conducted, and their results evaluated in notebooks for communication to stakeholders. Explorations need to be hosted for team collaboration without getting into a tangle of ad hoc artifacts, and all results should be reproducible at any time.

ML and Data Science

Once the foundations have been laid, your teams can work effectively with data and models. Your models don’t have to be complicated to benefit from solid data foundations. Many of the topics described here can be introduced slowly, and can improve the work of your teams one piece at a time.